
The first entry is here.

The second entry is here.

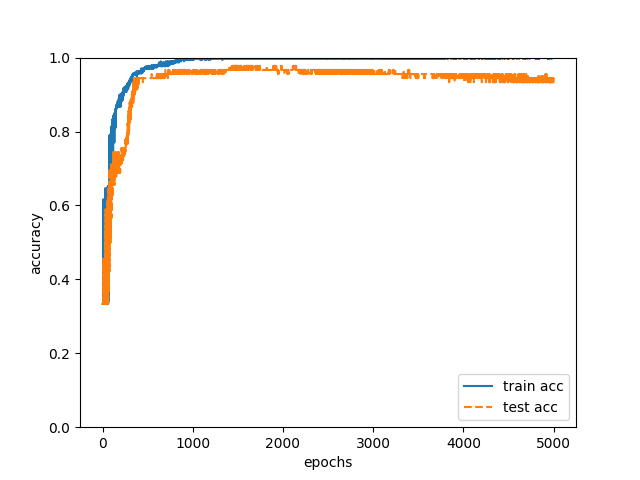

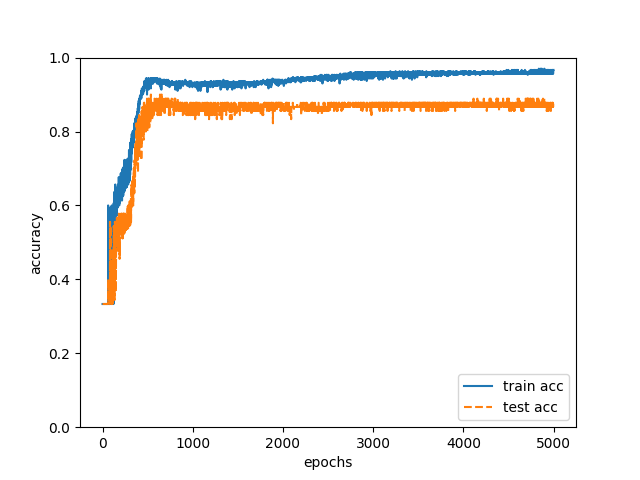

In the first and second entries, we showed that a neural network can estimate the author of a specification with high accuracy. In those entries, however, we changed only the input data. The structure of the network is a major theme in neural network development: the number of layers and the number of nodes are usually the first things to consider. As is well known, it is also important to vary the initial values and to try different optimization methods (the first and second entries used only SGD). Many approaches are conceivable, but the first one to try is making the network deeper. Since this development has only 18 items (the number of input nodes), we can expect that a large number of layers will not be required.

For the time being, we trained a network with two hidden layers (13 nodes and 8 nodes) using the training data and test data of “Both habit of document expression and habit of flow of logic”. The result was a 98% correct answer rate, which means 2 incorrect answers out of the 90 test cases. This is a very high accuracy rate. From this result, we can say that a deep neural network is not necessary here. That is understandable, because we have too few input nodes. The sample was not suitable for simulating the development of a deep neural network. Even so, I think the high accuracy rate was achieved because the input data was well suited to estimating the author.
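As a small sketch of the structure described above, the layer sizes can be written as a list and the arithmetic behind "98% means 2 incorrect answers" checked directly. The helper names `weight_shapes` and `incorrect_answers` are hypothetical and are not taken from the original development.

```python
def weight_shapes(layer_sizes):
    """(inputs, outputs) shape of each weight matrix between consecutive layers."""
    return list(zip(layer_sizes, layer_sizes[1:]))


def incorrect_answers(n_test, correct_rate):
    """Number of wrong estimates implied by a correct answer rate."""
    return n_test - round(n_test * correct_rate)


# 18 input items, hidden layers of 13 and 8 nodes, 3 output nodes (attorneys A to C).
deep_network = [18, 13, 8, 3]

print(weight_shapes(deep_network))   # [(18, 13), (13, 8), (8, 3)]
print(incorrect_answers(90, 0.98))   # 2
```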

Initially, I wanted to improve the correct answer rate gradually while using modern techniques such as an autoencoder (ten years have passed since autoencoders became a topic). Beyond simple neural networks, there are also various ingenious network structures, such as feeding the output of one node into another node in the same layer. However, judging from the results so far, the current sample does not seem large enough to evaluate network structures or the usefulness of various techniques. Although there may be room for further improvement, even if the correct answer rate reached 100%, I could not conclude that the structural change of the network was the reason. It could be just a coincidence. Therefore, I would like to try development with an autoencoder and similar techniques in another project in the future (at present, I think it would be good to gain experience with word2vec and Q-learning).

Now that I have experienced the development process of a neural network in the first to third entries, next time I would like to think about patenting the inventions that can arise in that development process.

The fourth entry is here.

The first entry is here.

In the first entry, we showed that a neural network built in a simulated development could estimate the authors of specifications with high accuracy. In that development, the neural network was trained on the following three patterns of input data.

1. Habit of document expression.

2. Habit of flow of logic.

3. Both habit of document expression and habit of flow of logic.

Below, I will concretely describe each pattern.

1. Habit of document expression.

This is the plan mentioned in the first entry. In this plan, the following 11 items were quantified for each specification before training: number of lines of main claim, number of characters of main claim, average number of lines of subclaims, average number of characters of subclaims, number of commas per line of claims, number of characters per line of claims, average number of commas per paragraph of the specification, average number of characters per paragraph of the specification, number of commas per specification, number of characters per specification, the number of characters of the specification.
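To make the idea of quantifying a specification concrete, here is a minimal sketch that computes four of the paragraph-level items from plain text. It is not the code used in the original development. It assumes that paragraphs are separated by blank lines and that both "," and "、" count as commas; the function name `paragraph_stats` is hypothetical.

```python
def paragraph_stats(spec_text, commas=(",", "、")):
    """Comma and character statistics of a specification, per paragraph and in total."""
    # Assumption: a blank line separates paragraphs.
    paragraphs = [p.strip() for p in spec_text.split("\n\n") if p.strip()]
    if not paragraphs:
        raise ValueError("specification text contains no paragraphs")
    total_commas = sum(p.count(c) for p in paragraphs for c in commas)
    total_chars = sum(len(p) for p in paragraphs)
    return {
        "avg_commas_per_paragraph": total_commas / len(paragraphs),
        "avg_chars_per_paragraph": total_chars / len(paragraphs),
        "total_commas": total_commas,
        "total_chars": total_chars,
    }


stats = paragraph_stats("a, b, c\n\nde, f")
print(stats)
```

The remaining items (lines and characters of the claims, and so on) would be computed in the same way after separating the claims from the description.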

Details of learning are as follows.

· Training data: 300 samples, each pairing the numerical values of the 11 items with the author.

· Test data: 90 samples, each pairing the numerical values of the 11 items with the author.

· Hidden layer: One layer with 7 nodes.

· Mini batch: about 10 to 50.

· Optimization method: stochastic gradient descent.

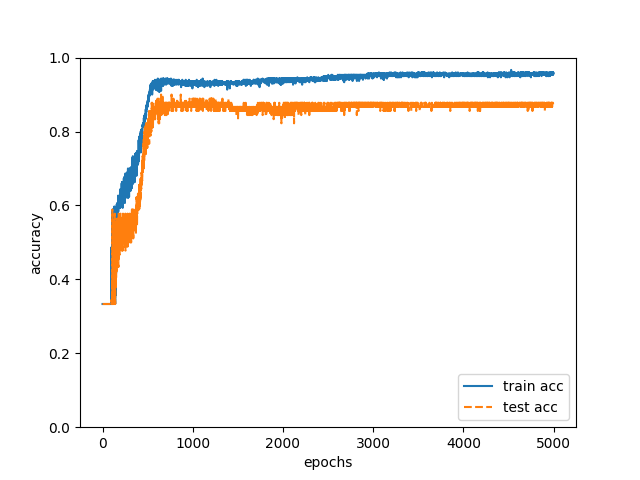

· Iteration: 50000 times. By doing the above learning with training data and estimating 90 authors of test data in the neural network after learning, the correct answer rate was 88% (Fig. 1).

Fig. 1
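The training settings listed above can be sketched in plain Python as follows. This is only an illustration under several assumptions that the entry does not state: a sigmoid hidden layer, a softmax output with cross-entropy loss, a learning rate of 0.1, and random placeholder data instead of the real specification features. All function names are hypothetical.

```python
import math
import random


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def init_params(n_in, n_hidden, n_out, rng):
    def matrix(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {"W1": matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
            "W2": matrix(n_out, n_hidden), "b2": [0.0] * n_out}


def forward(p, x):
    # Hidden layer: sigmoid (assumption). Output layer: softmax over the authors.
    h = [1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(row, x)) + b)))
         for row, b in zip(p["W1"], p["b1"])]
    y = softmax([sum(w * v for w, v in zip(row, h)) + b
                 for row, b in zip(p["W2"], p["b2"])])
    return h, y


def mean_loss(p, batch):
    # Cross-entropy loss; each label t is the index of the true author.
    return -sum(math.log(forward(p, x)[1][t]) for x, t in batch) / len(batch)


def sgd_step(p, batch, lr):
    """One SGD update using gradients averaged over the mini-batch."""
    scale = lr / len(batch)
    grads = []
    for x, t in batch:  # compute all gradients with the current parameters
        h, y = forward(p, x)
        dz2 = [yk - (1.0 if k == t else 0.0) for k, yk in enumerate(y)]
        dz1 = [sum(dz2[k] * p["W2"][k][j] for k in range(len(dz2)))
               * h[j] * (1.0 - h[j]) for j in range(len(h))]
        grads.append((x, h, dz1, dz2))
    for x, h, dz1, dz2 in grads:  # then apply them
        for k, d in enumerate(dz2):
            p["b2"][k] -= scale * d
            for j, hj in enumerate(h):
                p["W2"][k][j] -= scale * d * hj
        for j, d in enumerate(dz1):
            p["b1"][j] -= scale * d
            for i, xi in enumerate(x):
                p["W1"][j][i] -= scale * d * xi


def train(p, data, iterations, batch_size, rng, lr=0.1):
    for _ in range(iterations):
        sgd_step(p, rng.sample(data, batch_size), lr)


rng = random.Random(0)
# Placeholder data: 300 samples of 11 items, labelled with author index 0 to 2.
data = [([rng.random() for _ in range(11)], rng.randrange(3)) for _ in range(300)]
params = init_params(11, 7, 3, rng)  # 11 inputs, 7 hidden nodes, 3 authors
train(params, data, iterations=100, batch_size=30, rng=rng)  # the entry used 50000
```

The batch size of 30 is simply one value inside the "about 10 to 50" range mentioned above.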

2. Habit of flow of logic.

In this plan, we counted the occurrences of the following seven words in each specification before training.

“However”, “still”, “namely”, “also”, “further”, “could be”, “and”.

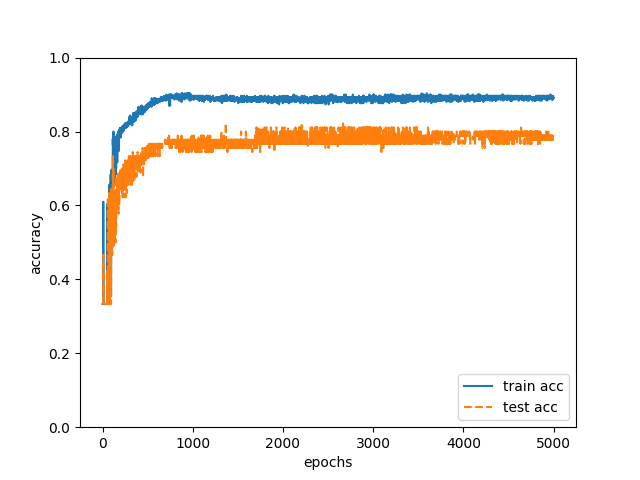

I often write sentences in the description in the order of “assertion, commentary, argument, exemplification” in order to explain logically. When writing in this order, “namely” appears before the commentary. Since the habit of logical explanation differs for each author, I thought that authors could be identified by statistically processing the words in the first part and the last part of each paragraph. The details of training are the same as in “1. Habit of document expression” (except that the hidden layer has five nodes). By training on the training data as described above and estimating the authors of the 90 test samples, the correct answer rate was 79% (Fig. 2).

Fig. 2
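Counting the seven words can be sketched as below. This simplified version counts whole-word occurrences anywhere in the text, whereas the entry mentions processing the first and last parts of each paragraph; it also uses the English words as listed in this entry. The name `count_logic_words` is hypothetical.

```python
import re

LOGIC_WORDS = ["however", "still", "namely", "also", "further", "could be", "and"]


def count_logic_words(spec_text):
    """Return one count per word in LOGIC_WORDS (case-insensitive, whole words only)."""
    text = spec_text.lower()
    return [len(re.findall(r"\b" + re.escape(word) + r"\b", text))
            for word in LOGIC_WORDS]


sample = "However, this could be used. Namely, A and B and also C."
print(count_logic_words(sample))  # [1, 0, 1, 1, 0, 1, 2]
```

The resulting list of seven counts is what would be fed to the seven input nodes.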

As described above, the correct answer rate was 88% when “1. Habit of document expression” was used as input data, and 79% when “2. Habit of flow of logic” was used as input data. This result differed from my intuition, so I was a little puzzled: when I estimate authors myself, I pay more attention to the flow of logic than to the number of punctuation marks.

Although “2. Habit of flow of logic” may be at a disadvantage because it has fewer items, I can say that “1. Habit of document expression” is effective for estimating the authors. Based on this result, anyone seriously applying for a patent could not leave out the idea of using “1. Habit of document expression” as input data.

Furthermore, although the correct answer rate is somewhat lower, 79% can still be obtained with “2. Habit of flow of logic”, so an applicant should not omit “2. Habit of flow of logic” from the claims either.

A significant correct answer rate was obtained when either “1. Habit of document expression” or “2. Habit of flow of logic” was used alone as input data, so it should be possible to estimate more accurately if both are used as input data.

Therefore, I trained the neural network on “3. Both habit of document expression and habit of flow of logic”. We created training data and test data by combining the input data used in “1. Habit of document expression” and “2. Habit of flow of logic”, which gives 18 input items. The details of training are the same as in “1. Habit of document expression” (except that the hidden layer has 11 nodes). After training on the training data and estimating the authors of the 90 test samples with the trained network, the correct answer rate was 93% (Fig. 3): the author was estimated correctly for 84 of the 90 test samples.

Fig. 3
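Combining the two kinds of input data and checking the reported rate can be sketched as follows. The helper names are hypothetical, and the feature values are placeholders rather than real measurements.

```python
def combine_features(expression_items, logic_items):
    """Concatenate the 11 document-expression items and the 7 logic-word counts."""
    if len(expression_items) != 11 or len(logic_items) != 7:
        raise ValueError("expected 11 expression items and 7 logic items")
    return list(expression_items) + list(logic_items)


def correct_answer_rate(predicted, actual):
    """Fraction of test samples whose author was estimated correctly."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


combined = combine_features([0.0] * 11, [0] * 7)
print(len(combined))  # 18 input nodes

# 84 correct estimates out of 90 test samples.
actual = ["A"] * 90
predicted = ["A"] * 84 + ["B"] * 6
print(round(correct_answer_rate(predicted, actual) * 100))  # 93
```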

These results show that “1. Habit of document expression”, “2. Habit of flow of logic”, and “3. Both habit of document expression and habit of flow of logic” are all very useful as input data for estimating the author of a specification. On the other hand, developing a neural network also requires optimizing the structure of the network. Next time we will report the result of changing the structure of the network.

The third entry is here.

The fourth entry is here.

When I was a student, I had to find a good book before I could start studying, and it took a long time to come across one. Now you can find a good book as soon as you search, and you can read good information on the web without purchasing a book (thanks, Google). Many university-level MOOC lectures are free, so motivated people can learn as much as they like. Recently I have been concentrating on learning artificial intelligence related technology, and I feel that I have been able to learn efficiently at very low cost by using the internet. I am grateful for internet-related technology.

Since I am a patent attorney, I am interested in patent rights for inventions, but I always think that we need to understand a technology deeply before thinking about a strategy for rights. Therefore, I believe that a deep understanding of the technology is extremely important for me with artificial intelligence related technology as well. For deep understanding, I thought that hands-on development was the best approach, so I tried to simulate the development of technology to achieve a specific purpose. Through this simulated development experience, I aim to study patenting strategy for artificial intelligence related technology. I obtained some results, so I will record what I learned.

The outline of development is as follows.

· Target: Development of artificial intelligence that identifies the author of the patent specification from the features of the patent specification.

· Technology used: Neural network.

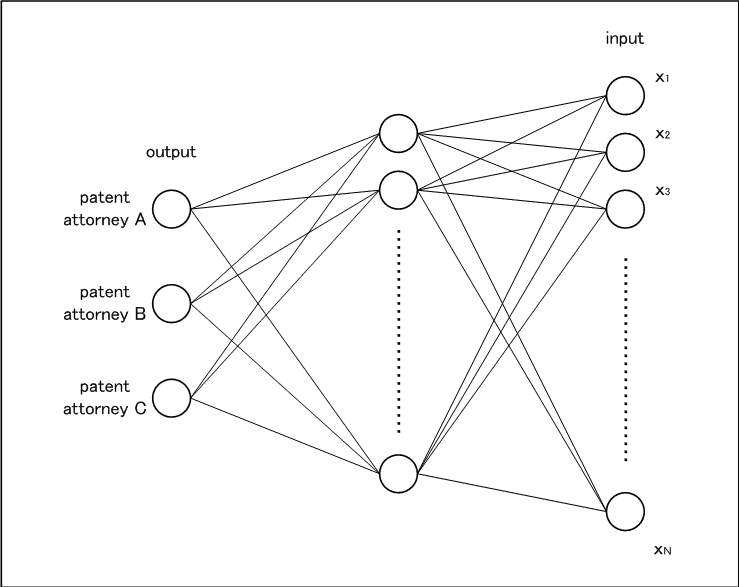

The neural network adopted is as shown in Figure 1 below.

Fig. 1

There are three patent attorneys in our office. Comparing the published specifications written by each patent attorney, each seems to have its own style. Therefore, I thought that if the analysis results of each patent attorney’s specifications were used as input data, and three nodes corresponding to patent attorneys A to C were used as outputs, we could develop a neural network that takes the features of a specification as input and outputs the author. Each output node corresponds to one patent attorney, and the node with the largest output value gives the estimated author. It seems that a neural network producing meaningful output can be developed by determining the input data and choosing an appropriate network. First of all, we need to determine the input data. For the time being, the neural network is configured with one hidden layer as shown in Fig. 1, and the number of nodes in the hidden layer is set to the midpoint between the number of input nodes and the number of output nodes.

I thought of three patterns of input data:

1. Habit of document expression.

2. Habit of flow of logic.

3. Both habit of document expression and habit of flow of logic.

Since both features do not depend heavily on the technical field and appear more or less in all specifications, if we can quantify them, we will be able to create input data that corresponds to the author.
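The two rules in this design, the hidden layer size and the reading of the output nodes, can be sketched as below. Rounding the midpoint up is an assumption on my part; it reproduces the hidden layer sizes reported in the second entry (7, 5 and 11 nodes for 11, 7 and 18 input nodes). The function names are hypothetical.

```python
import math

AUTHORS = ["A", "B", "C"]  # one output node per patent attorney


def hidden_node_count(n_inputs, n_outputs):
    """Midpoint between the input and output node counts, rounded up (assumption)."""
    return math.ceil((n_inputs + n_outputs) / 2)


def estimate_author(outputs):
    """Return the attorney whose output node has the largest value."""
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return AUTHORS[best]


print(hidden_node_count(11, 3))          # 7
print(hidden_node_count(7, 3))           # 5
print(hidden_node_count(18, 3))          # 11
print(estimate_author([0.1, 0.7, 0.2]))  # B
```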

Once we have decided on such a development policy, the next step is practice. We prepared 130 patent specifications written by each patent attorney. We converted these into text data, statistically processed the text from multiple points of view, and used the resulting values as input data. Through this preprocessing, we prepared 390 teacher data samples (each pairing the input values for multiple nodes with one of patent attorneys A to C).

This time we used 300 samples as training data and 90 as test data. After 50,000 iterations of training on the data representing habit of document expression, the accuracy on the training data was 96% (train.acc) and the accuracy on the test data was 88% (test.acc).

Fig. 2
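The division of the 390 teacher data samples into 300 training samples and 90 test samples could look like the sketch below. The entry does not say how the split was made, so the random shuffle with a fixed seed is an assumption, and the feature vectors are placeholders. The name `split_teacher_data` is hypothetical.

```python
import random


def split_teacher_data(samples, n_train=300, seed=0):
    """Shuffle the samples and split them into training and test sets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# 130 specifications for each of patent attorneys A to C, 11 items each (placeholders).
samples = [([0.0] * 11, author) for author in "ABC" for _ in range(130)]
train_data, test_data = split_teacher_data(samples)
print(len(train_data), len(test_data))  # 300 90
```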

88%! I am surprised. It is almost too successful, isn’t it? There is still much room for improvement, such as the obvious overfitting.

Normally, when I read the specifications written by each patent attorney, I can guess that “this specification was probably written by patent attorney A” and so on. But it is surprising that a neural network can identify the author of a specification with an accuracy of about 90%. The habits of the author evidently appear quite clearly in the specification. Humans probably cannot estimate authors with this accuracy. I realized the potential of the neural network.

Well, as this entry is getting long, I will describe the concrete analysis next time.

The second entry is here.

The third entry is here.

The fourth entry is here.

· References

“Making Deep Learning from scratch” O’Reilly Japan. Yasuhiro Saito. The explanation was very easy to understand. Without this book, I do not think I could have obtained these results.

“Introductory programming for language research” Kaitaku-sha Yoshihiko Asao, Lee Je Ho.

“Deep.Learning”, https://www.udacity.com/course/deep-learning-ud 730. It is a lecture that gave me a chance to learn Deep Learning by myself.